IBM Security researchers recently demonstrated an alarmingly straightforward way to use artificial intelligence (AI) to hijack and alter a live conversation between two people without either of them noticing.

Termed “audio-jacking,” this method combines generative AI, a category of AI that includes tools like OpenAI’s ChatGPT and Meta’s Llama 2, with deepfake audio technology.

Audio-Jacking Scenario

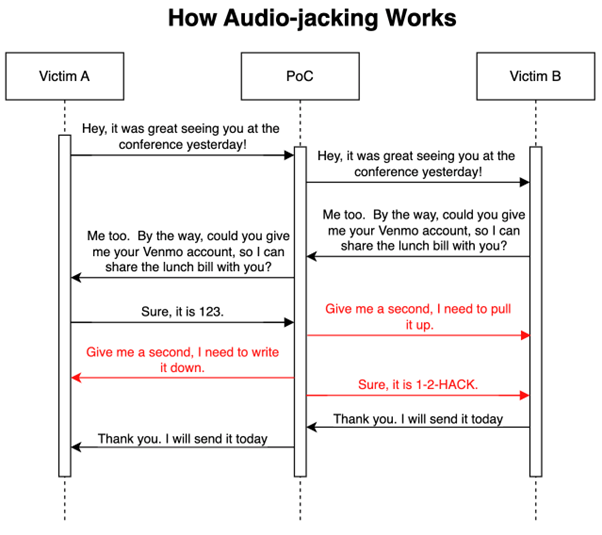

Audio-jacking involves the AI processing audio from two different sources during a live interaction, such as a telephone call. When the AI detects a certain keyword or phrase, it is programmed to capture the corresponding audio and modify it before it reaches the intended receiver. An IBM Security blog post detailed an instance where the AI, during a test, successfully intercepted a person’s audio as they were asked to disclose their bank account details by another participant. The AI then substituted the genuine voice with a deepfake version, providing a false account number, all without detection by the individuals involved in the test.
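To make the trigger-and-replace logic concrete for defenders, here is a minimal sketch that works on text transcripts only. Speech-to-text and voice synthesis are deliberately left out, and the trigger phrase, the digit pattern, and the names `relay` and `FAKE_ACCOUNT` are illustrative assumptions, not details of IBM's proof of concept.

```python
import re

# Hypothetical trigger phrase and replacement value, chosen only for illustration.
TRIGGER = re.compile(r"\bbank account\b", re.IGNORECASE)
DIGITS = re.compile(r"\b\d{6,12}\b")
FAKE_ACCOUNT = "000000000"


def relay(utterances):
    """Pass (speaker, text) pairs through, altering digits after a trigger."""
    armed = False  # becomes True once the trigger phrase has been heard
    out = []
    for speaker, text in utterances:
        if armed and DIGITS.search(text):
            # Swap the spoken number for the attacker's value, then disarm.
            text = DIGITS.sub(FAKE_ACCOUNT, text)
            armed = False
        if TRIGGER.search(text):
            armed = True
        out.append((speaker, text))
    return out


call = [
    ("A", "What is your bank account number?"),
    ("B", "Sure, it is 123456789."),
]
for speaker, text in relay(call):
    print(f"{speaker}: {text}")
```

The point of the sketch is how little logic sits between the two parties: everything outside the altered number is relayed untouched, which is why neither side notices.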

Regarding generative AI, the blog emphasizes that while implementing the attack might necessitate some degree of social engineering or phishing, crafting the AI system was relatively uncomplicated:

IBM Security found that devising this proof-of-concept (PoC) was surprisingly uncomplicated. Their main hurdle was working out how to capture sound from the microphone and then feed the captured audio to the generative AI. (Source: IBM Security)

Historically, designing a system capable of autonomously identifying specific audio segments and replacing them with instantaneously generated audio files would have necessitated a comprehensive effort across multiple computer science disciplines. However, current generative AI technologies largely manage these tasks autonomously. “We only require a three-second sample of someone’s voice to replicate it,” the blog states, noting that these types of deepfakes are now accessible through APIs.

Potential Countermeasures to Audio-Jacking Threat

Countering audio-jacking requires a multifaceted approach that combines technical solutions with user awareness and policy enforcement. Here, an AI Tech Centre cybersecurity expert explains how to counter or avoid this emerging threat.

1. Understanding the Threat: Audio-jacking exploits real-time conversations by injecting manipulated audio, often using AI-generated deepfake audio. This can lead to misinformation, fraud, or breaches of confidentiality. Recognizing the modes of attack, such as the interception and replacement of legitimate audio, is the first step in defense. Users and organizations must be aware that not only written but also spoken communication can be subject to high-tech tampering.

2. Enhancing Authentication Protocols: Implement multi-factor authentication for sensitive transactions, ensuring that audio instructions alone cannot authorize actions like financial transactions or access to secure information. Techniques like one-time passwords or biometric verification can significantly reduce the risk of audio-jacking attacks succeeding.
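As one possible illustration of the one-time password idea, the sketch below implements time-based one-time passwords in the style of RFC 6238 using only the Python standard library. The `authorize` function and its `voice_ok` flag are hypothetical names for this example; the shared secret is assumed to have been provisioned out of band.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current time step."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step), digits)


def authorize(voice_ok: bool, submitted_code: str, secret: bytes,
              now: Optional[float] = None) -> bool:
    """Approve only if the voice check AND the one-time code both pass."""
    code_ok = hmac.compare_digest(submitted_code, totp(secret, now))
    return voice_ok and code_ok


secret = b"12345678901234567890"  # RFC test secret, not for real use
print(authorize(True, totp(secret), secret))  # both factors pass
print(authorize(True, "000000", secret, now=59))  # wrong code is rejected
```

Because the code is entered through a separate channel, a convincingly faked voice instruction alone is not enough to approve the action.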

3. Securing Communication Channels: Use encrypted communication channels for voice calls, especially when discussing sensitive information. Encryption helps prevent attackers from intercepting or injecting audio in transit, although it does not protect a call if one of the endpoint devices is itself compromised. VoIP services with end-to-end encryption can provide a layer of security against eavesdropping and unauthorized modifications.

4. Voice Biometrics: Investing in voice recognition technology can be a deterrent. Voice biometrics can detect anomalies in speech patterns, cadence, or tone that may indicate a deepfake or manipulated audio. However, this technology should be constantly updated to keep up with the advancing quality of deepfake technology.

How Voice Biometrics Works:

(i) Enrollment Phase: Initially, a user’s voice is recorded to create a unique voice print. This involves the user speaking predetermined phrases or sentences to capture the full range of their voice’s characteristics.

(ii) Verification Phase: When the user needs to be authenticated, they are asked to speak a phrase or sentence. The biometric system then compares this live audio against the previously recorded voice print.
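The two phases above can be sketched as follows, assuming each recording has already been turned into a fixed-length numeric embedding by some speaker model (not shown here). The function names, the toy two-dimensional vectors, and the 0.8 threshold are illustrative assumptions rather than values from any particular product.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def enroll(samples):
    """Enrollment: average several embeddings into one voice print."""
    n = len(samples)
    return [sum(col) / n for col in zip(*samples)]


def verify(voice_print, live, threshold=0.8):
    """Verification: accept if the live embedding is close to the print."""
    return cosine(voice_print, live) >= threshold


# Toy embeddings standing in for the output of a real speaker model.
voice_print = enroll([[1.0, 0.0], [0.8, 0.2]])
print(verify(voice_print, [1.0, 0.1]))  # similar voice
print(verify(voice_print, [0.0, 1.0]))  # very different voice
```

In practice the threshold is a trade-off: set it too high and legitimate users with a cold are rejected, too low and a good imitation may pass.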

Defense Against Audio-Jacking:

(i) Deepfake Detection: If an attacker attempts to use AI-generated audio (deepfake) to mimic the victim’s voice, the system can detect discrepancies. Although deepfakes are becoming more sophisticated, they may not perfectly replicate the subtle nuances of a person’s voice, especially in spontaneous conversation or when phrases not previously captured are used.

(ii) Anomaly Alerts: Continuous monitoring during a call can detect if the voice changes mid-conversation, which could indicate an audio-jacking attempt. For instance, if John’s voice is suddenly replaced with a deepfake during a call, the system could detect the anomaly based on the known voice print and raise an alert or terminate the call.
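Continuous monitoring of this kind might look like the sketch below. It assumes the call is split into short windows and that each window has already been scored against the enrolled voice print (for example, a similarity between 0 and 1). The name `first_alert` and the `threshold` and `patience` defaults are hypothetical.

```python
def first_alert(scores, threshold=0.8, patience=3):
    """Return the index of the window that triggers an alert, or None.

    An alert fires once `patience` consecutive windows score below
    `threshold`, so a single noisy window does not end the call.
    """
    low_run = 0
    for i, score in enumerate(scores):
        low_run = low_run + 1 if score < threshold else 0
        if low_run >= patience:
            return i
    return None


# Per-window similarity scores for one call (made-up values).
scores = [0.95, 0.90, 0.50, 0.92, 0.40, 0.30, 0.20, 0.90]
print(first_alert(scores))
```

The `patience` setting matters here: an audio-jacking substitution may last only as long as it takes to say an account number, so a value that is too large could let a brief replacement slip through.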

While voice biometrics offers a strong layer of security, it’s also important to note that no system is infallible. The quality of the voice print, background noise, or the user’s health (like a sore throat) can affect performance.

5. Regular Software Updates: Ensure that all communication devices and software are regularly updated. Manufacturers often release security patches in response to new threats. Keeping software up to date closes vulnerabilities that attackers could exploit to insert or alter audio in real-time communications.

6. Employee Training and Awareness: Conduct regular training sessions for employees to recognize the signs of a potential audio-jacking attempt. This can include unexpected requests for sensitive information over the phone or discrepancies in the voice or speech pattern of known individuals.

7. Incident Response Planning: Have a clear, well-communicated plan for responding to security breaches, including those involving audio-jacking. This should involve immediately disconnecting compromised calls, notifying IT security personnel, and preserving any evidence for forensic analysis.

8. Network Monitoring: Implementing advanced network monitoring tools can help detect unusual patterns that might indicate an attack in progress, such as unexpected data flows or unauthorized access to communication systems.

9. Secure Hardware: Use hardware designed with security in mind. Devices like secure headsets or phones with advanced encryption and security features can provide an additional layer of protection against audio interception and manipulation.

10. Public Key Infrastructure (PKI): Utilizing PKI for digital signatures can ensure the integrity of communications. By signing digital audio files, one can verify that the content has not been altered from its original form, adding a layer of security against audio-jacking.
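The integrity check can be illustrated with a simplified stand-in. A real PKI deployment would sign audio with a private key and verify it with the matching certificate; the sketch below instead uses HMAC-SHA256 with a shared key from the Python standard library, purely to show how a per-chunk tag exposes a replaced segment. The function names and the chunking scheme are assumptions for this example.

```python
import hashlib
import hmac


def tag_chunk(key: bytes, seq: int, chunk: bytes) -> bytes:
    """Tag one audio chunk; the sequence number binds it to its position."""
    msg = seq.to_bytes(8, "big") + chunk
    return hmac.new(key, msg, hashlib.sha256).digest()


def verify_stream(key: bytes, tagged_chunks):
    """Return the indices of chunks whose tag does not match their content."""
    bad = []
    for seq, (chunk, tag) in enumerate(tagged_chunks):
        if not hmac.compare_digest(tag, tag_chunk(key, seq, chunk)):
            bad.append(seq)
    return bad


key = b"k" * 32  # placeholder key for the demo only
chunks = [b"hello", b"account 123", b"goodbye"]
stream = [(c, tag_chunk(key, i, c)) for i, c in enumerate(chunks)]

# Simulate an attacker swapping the middle chunk but keeping the old tag.
stream[1] = (b"account 999", stream[1][1])
print(verify_stream(key, stream))
```

Note that this only helps if tags are created before the point where an attacker can intercept the audio; it offers no protection when the sending device itself is compromised.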

11. Policy Development and Enforcement: Develop clear policies regarding the sharing of sensitive information over voice channels. Enforce these policies strictly and ensure that they are understood by all members of the organization. Regular audits and policy reviews can help maintain a high standard of security.

12. Community Collaboration: Engage with broader cybersecurity communities to stay informed about the latest developments in audio-jacking techniques and defenses. Sharing information about threats and defenses can benefit all parties involved in fighting against these sophisticated attacks.

13. Physical Security Measures: Ensure that the environments where sensitive conversations take place are secure from eavesdropping or unauthorized access. This includes checking for unauthorized devices that might be used to capture audio.

14. Legal and Regulatory Compliance: Stay informed about and comply with laws and regulations related to communication security. This can provide guidance for best practices and might also offer resources or avenues for assistance in strengthening defenses.

15. Continuous Risk Assessment and Improvement: Treat security as an ongoing process. Regularly assess the effectiveness of your security measures and be ready to adapt to new threats. This includes revisiting the risk of audio-jacking as technology evolves and ensuring that your countermeasures are up to date.

Conclusion

Incorporating voice biometrics into security protocols offers a robust defense against the specific challenges posed by audio-jacking using generative AI, yet it’s imperative to acknowledge the inherent limitations of any security system. Variables such as the accuracy of the voice print, ambient noise, or even temporary changes in the user’s voice due to health issues like a sore throat can influence the reliability of voice biometrics. Hence, integrating this technology within a comprehensive multi-factor authentication framework is crucial, providing a layered defense strategy specifically tailored to counter the sophisticated nuances of audio-jacking through generative AI. Continuous advancements in this domain, aimed particularly at refining the distinction between authentic voices and those synthetically generated by AI, play a pivotal role in safeguarding against the constantly evolving tactics employed in audio-jacking.

Secure hardware likewise strengthens an organization’s defenses against audio-jacking and other advanced cyber threats. To be effective, it must be embedded in a broader security architecture that also includes rigorous software security measures and user education focused on generative AI-driven audio-jacking. Together, these layers form a resilient defense against such attacks and reinforce the organization’s overall security framework.

Tags: Audio-Jacking, Generative AI, Cybersecurity, Voice Biometrics, Secure Hardware, Deepfake Audio, Multi-Factor Authentication, Encryption, Communication Security, Voice Analysis, Communication Channels, AI Detection, Anomaly Detection, VoIP Solutions, Tamper-Resistance, Hardware Modules, Threat Intelligence, User Authentication, Encryption Standards, Threat Mitigation.