Let’s be honest for a second: AI is everywhere. It’s in our phones, powering chatbots, driving cars (kind of), and even writing articles like this one (I promise this isn’t fully AI-generated, okay?). We’ve all seen those flashy headlines claiming that AI will either save humanity or destroy it, but honestly, isn’t that giving this tech way too much credit? I mean, we’re talking about lines of code, not Zeus descending from Mount Olympus with lightning bolts.

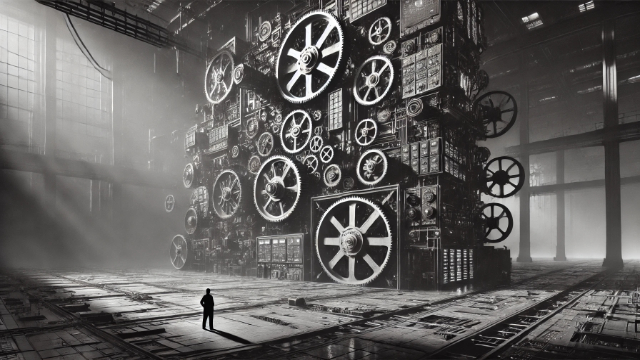

In his thought-provoking Guardian piece “No god in the Machine: the pitfalls of AI worship,” Navneet Alang tackles this very issue: the idea that we’re starting to treat AI like some kind of omnipotent, god-like entity. And the kicker? It’s not even all that intelligent. Alang digs into the hype, fear, and misconceptions surrounding AI, pulling back the curtain on our fascination with it. So let’s unpack this together, laugh a little, and maybe get a tad existential.

The AI Obsession – Hype and Hubris

We’ve all been there: watching a sci-fi movie where AI takes over, builds an army of robots, and enslaves humanity. And let’s admit it, a tiny part of us thinks, “Could this actually happen?” That’s precisely the mindset Alang explores when he opens with Arthur C. Clarke’s story “The Nine Billion Names of God,” in which Tibetan monks use a computer to list every possible name of God, a task they believe is the very purpose of creation. But here’s the twist: once the machine finishes, that purpose is fulfilled, and the stars start going out. Dramatic, right? It’s the perfect metaphor for our current AI worship: the belief that this machine learning stuff is going to either solve all our problems or push us to the brink of extinction.

But here’s the reality check: AI isn’t an all-knowing deity. It’s more like that one kid in class who’s great at spotting patterns in the textbook but has zero original thoughts. Alang reminds us that large language models (LLMs) like ChatGPT are really just glorified copycats: they piece together words and sentences based on statistical patterns learned from enormous amounts of text. That’s why they’re good at mimicking human language but absolutely clueless about what they’re actually saying.

So, why do we keep treating AI like it’s the second coming of Einstein? It boils down to two things: our fascination with tech and a little bit of fear. We want to believe that AI can fix our problems, whether it’s solving world hunger, getting that perfect beach body, or finally teaching us how to fold a fitted sheet. And because we don’t fully understand how it works, we end up attributing god-like qualities to it.

The Danger of Tech Solutionism

Enter the “tech solutionists,” a group Alang loves to call out. These are the folks who genuinely believe AI is the answer to everything from global warming to, I don’t know, probably curing bad hair days. They’re like the Silicon Valley equivalent of those people who swear apple cider vinegar can cure anything. But Alang is quick to remind us that technology isn’t some magic wand. It can help, sure, but it can’t replace human judgment, morality, or that weird intuition we all have when we just know the milk’s gone bad even though the expiration date says it’s fine.

In fact, AI’s inability to truly “think” or possess genuine human qualities is a huge limitation. These models don’t feel empathy, they don’t ponder existential questions, and they definitely don’t know why pineapple on pizza is such a divisive issue (because it really is). So when we start thinking that AI is going to replace human decision-making entirely, we’re stepping into dangerous territory. It’s like handing over the keys to a self-driving car that doesn’t know when to stop for a squirrel.

The Illusion of Intelligence – How AI Fakes It

Now, here’s where things get interesting. Alang doesn’t just leave us hanging with a “don’t trust AI” warning. Instead, he pulls apart how these systems really work. Take ChatGPT, for example. It’s basically the world’s best impersonator: it has no clue what it’s talking about, but it’s really good at sounding like it does. It strings together sentences by predicting, one word after another, what is likely to come next based on the data it was trained on, which makes it seem like it’s having a coherent conversation. In reality, it’s just winging it.

If AI were a person, it’d be that friend who nods along at the party, pretending to understand the deep philosophical debate about whether or not dogs actually dream. (Spoiler: they do, and it’s adorable.) But here’s the kicker: because AI mimics human language so well, we end up projecting intelligence onto it, giving it more credit than it deserves.

It’s like seeing a parrot repeat a phrase and thinking, “Wow, this bird really gets me.” When, in reality, Polly just wants a cracker.

The Pitfalls of Projection and Bias

The problem with treating AI as if it’s got a Ph.D. in life is that we start using it in ways that can be downright harmful. Alang mentions in “No god in the Machine” that AI systems often carry biases because they’re trained on data that reflects human prejudices. It’s like if your parrot started saying offensive things because it only heard rude people all day (not the bird’s fault, but yikes). This leads to real-world consequences, like AI being used in hiring practices and inadvertently favoring certain groups over others.

In short, AI reflects our imperfections, warts and all. And that’s a bit of a problem when we start using it as if it’s the ultimate decision-maker. So, how do we address this? Well, first, let’s stop pretending that AI is a magical oracle that knows all. It’s more like a really advanced calculator that sometimes blurts out nonsense.

AI as a Mirror – The Human Element

Here’s where things get philosophical, but stick with me—it’s worth it. Alang touches on the idea that AI, in many ways, is a reflection of us. It’s built on human data, trained on human language, and designed by, you guessed it, humans. So when we see intelligence in these systems, we’re really just seeing a reflection of ourselves.

But here’s the twist: AI lacks everything that makes us truly human. It doesn’t have emotions, a sense of morality, or that weird urge to eat an entire pint of ice cream after a rough day (just me?). And yet, we’re so eager to give it power over our lives. Maybe it’s because we’re looking for something to make sense of this chaotic, unpredictable world. Or maybe we’re just lazy and want a machine to do the thinking for us. Either way, it’s a bit like giving your GPS control over your entire life—sure, it’s great at directions, but it won’t help you navigate an existential crisis.

Solutions – How to Keep AI in Its Lane

Okay, so AI isn’t our savior, but it’s also not our doom. It’s somewhere in between, like a really helpful but slightly unreliable intern. So how do we make sure we’re using it wisely?

- Acknowledge Its Limits: First things first, let’s be real about what AI can and can’t do. It’s great for automating repetitive tasks or analyzing massive amounts of data. But expecting it to solve complex human issues? That’s like asking your Roomba to give you relationship advice.

- Ethical Oversight: We need to establish ethical guidelines and regulations to prevent AI from reinforcing harmful biases. It’s like setting ground rules for a toddler—if you don’t, chaos will ensue. And nobody wants an AI that thinks it’s okay to make biased hiring decisions or spread misinformation faster than a juicy piece of celebrity gossip.

- Human Collaboration: Rather than seeing AI as a replacement for human intelligence, we should treat it as a tool that can enhance what we’re already good at. Think of it as a helpful sidekick, like Robin to Batman. (Yes, we’re Batman in this analogy—deal with it.)

- Education and Critical Thinking: We’ve got to stop treating AI as this mysterious black box. The more we understand how it works, the less likely we are to be fooled by its faux-intelligence. Let’s pull back the curtain and reveal that it’s just a fancy algorithm, not an omnipotent being.

- Resist the Hype: Finally, let’s chill out on the whole “AI is going to take over the world” narrative. It’s not Skynet, and it’s not going to magically solve all our problems. The moment we stop worshipping it, we can start using it more responsibly.

Keeping Our Feet on the Ground

At the end of the day, AI is just a tool—a powerful, fascinating, occasionally frustrating tool. And while it’s tempting to fall into the trap of seeing it as some kind of technological messiah, we need to remember that it’s only as good as the people who build and use it. So let’s keep our expectations in check, laugh at its occasional absurdity, and maybe, just maybe, stop treating it like it’s capable of replacing the best (and worst) parts of being human.

Now, if you’ll excuse me, I’m off to ask my phone’s AI assistant why it still can’t understand my accent. Wish me luck.

AI’s Place in Society – Navigating the Hype and Realities

Now that we’ve unpacked the first half of this conversation, let’s dive deeper into how AI fits into our lives—and no, this isn’t going to be another lecture about how it’s stealing our jobs or plotting world domination. Instead, let’s talk about something a bit more nuanced: how we can coexist with AI without turning it into the latest cult we blindly follow.

AI in Our Everyday Lives: Friend or Foe?

Ever asked Alexa what the weather’s like? Or let Netflix recommend your next binge-worthy series? Congrats—you’re already hanging out with AI. It’s sneaky like that, quietly embedding itself into our routines until it’s hard to remember a time without it. And in many ways, that’s not a bad thing! These little conveniences make life easier, like having a personal assistant who’s always there but never asks for a raise.

But here’s where it gets tricky. Alang points out that our willingness to let AI handle even the smallest tasks can lead to a slippery slope. We start relying on it for more and more, until suddenly we’re letting it decide what news we read, who we date (thanks, Tinder algorithms), or even how we feel about ourselves. It’s a bit like that fun drinking buddy who asked to crash on your couch for one night: five years later, they’re still there, eating all your snacks.

The Danger of Over-Reliance

The more we let AI creep into every facet of our lives, the more we risk losing the ability to think critically for ourselves. I mean, how many times have you blindly followed Google Maps, even though you had a sneaking suspicion it was taking you the wrong way? (Guilty as charged.) It’s not that the technology is inherently bad, but when we stop questioning it, we’re in trouble. That’s the moment when AI stops being a helpful tool and starts becoming a crutch. And trust me, nobody looks good hobbling around on a crutch they don’t actually need.

Solution: We need to cultivate a healthy dose of skepticism. It’s okay to let AI guide you, but don’t be afraid to push back, ask questions, or, heaven forbid, use your own judgment every once in a while. Treat it like you would treat any piece of advice from a friend: helpful, but not infallible.

The Human Touch – What AI Just Can’t Get Right

Let’s get one thing straight: AI is never going to understand the sheer joy of dancing in your kitchen at 2 a.m. while munching on cold pizza. It won’t grasp why you cry during that one episode of Friends (you know the one), or why you suddenly feel an existential crisis coming on when you think about the fact that penguins can’t fly. These moments—the messy, irrational, wonderfully unpredictable bits of life—are what make us human. And this is where AI falls flat.

In “No god in the Machine,” Alang points out that while AI can mimic human language, it can’t replicate the human experience. It doesn’t feel things, it doesn’t make decisions based on gut instinct, and it definitely doesn’t have a mid-life crisis at the age of 35. It’s missing that little spark we like to call consciousness. And honestly? That’s kind of comforting. Because as much as we might want a quick fix for all our problems, there’s something beautiful about the fact that no algorithm can replicate what it means to be us.

Solution: Embrace your humanity. Make mistakes, get messy, and let yourself be imperfect. The more we lean into what makes us human, the less likely we are to hand over our lives to a machine that can never truly understand them.

AI and the Creative World – Can Machines Be Artists?

Now, here’s a hot topic that always gets people talking: AI in the arts. From writing poems to creating music and even painting pictures, AI has been making waves in the creative world, leading some folks to wonder, “Are machines the artists of the future?” Well, let me stop you right there—AI-generated content might be impressive, but it’s about as soulful as a cardboard cutout of Ryan Gosling. (Sorry, not sorry.)

What makes art, well, art, is the emotion, the vulnerability, and the messy, imperfect humanity behind it. When we look at a Van Gogh painting, we’re not just seeing brushstrokes—we’re seeing the pain, passion, and perspective of a man who was battling his own demons. AI doesn’t have demons. It doesn’t have joy, sorrow, or that weird sense of pride you get when you finally assemble an IKEA bookshelf on your own.

When we start treating AI-generated art as equal to human creation, we risk devaluing what it means to be an artist. Sure, it can replicate styles, analyze patterns, and create something that looks pretty on the surface, but it’s all smoke and mirrors—there’s no soul behind it.

Solution: Celebrate the imperfections in human creativity. Rather than being intimidated by AI’s ability to pump out perfectly polished pieces, let’s remember that art is about the process, the journey, and the heart that goes into it. The next time you hear about an AI-generated painting selling for millions, just smile and remember that the machine behind it can never know the thrill of an inspired, late-night creative breakthrough.

Ethical AI – Keeping the Machine in Check

This is where things get serious, folks. The biggest takeaway from Alang’s article is the reminder that AI is only as good—or as dangerous—as the people who create and deploy it. We’ve all heard the horror stories: AI systems with racial biases, algorithms that promote misinformation, or facial recognition tech that ends up in the hands of authoritarian regimes. Yikes, right?

It’s easy to get caught up in the excitement of what AI can do, but we’ve got to ask ourselves: just because we can do something, does that mean we should? AI’s potential to reinforce existing inequalities is very real, and without ethical oversight, we could end up amplifying problems instead of solving them.

Solution: Push for transparency, accountability, and regulation in AI development. We need to ensure that AI isn’t just something created in the shadows by a handful of tech giants with questionable motivations. Instead, let’s involve ethicists, policymakers, and diverse voices in the conversation. Because when it comes to wielding this much power, we can’t afford to cut corners.

The Future – What Happens Next?

So, where do we go from here? Alang’s article offers a sobering reminder that AI is not a magic wand, but it’s also not a ticking time bomb. It’s a tool, and like any tool, it’s up to us to decide how we use it.

We can choose to let it guide us, enhance our capabilities, and tackle the mundane so we can focus on what truly matters. Or, we can worship it, let it dictate our lives, and forget that behind every algorithm is a human decision that shaped it. The choice is ours, and it’s one we need to make carefully.

Final Thoughts: Keeping the Faith (in Humanity, Not AI)

AI is cool—there’s no denying that. But it’s not a deity, a savior, or the answer to all our problems. It’s a reflection of us: flawed, brilliant, and full of potential. And as long as we remember that, we’ll be just fine.

So let’s stop worrying about whether or not AI is going to take over the world, and start focusing on how we can use it to make this world a little bit better. After all, we’re the ones with the power to decide what happens next. And that’s a responsibility worth taking seriously.

FAQs

1. Is AI actually intelligent?

Nope, not in the way we understand intelligence. AI can mimic human language and recognize patterns, but it doesn’t have consciousness, emotions, or independent thought. It’s more like a really smart parrot—great at repeating things but not so great at understanding them.

2. Can AI be biased?

Absolutely! AI learns from the data it’s trained on, so if that data contains biases (spoiler: it usually does), the AI will reflect them. That’s why it’s crucial to have diverse teams working on AI development and to implement ethical guidelines.

3. Will AI replace human creativity?

Not a chance. While AI can replicate styles and generate content, it lacks the emotional depth, personal experiences, and vulnerability that make human creativity so special. It might be able to paint a picture, but it’ll never know the joy of creating it.

4. Should we be afraid of AI?

Fear? Nah. Cautious? Definitely. AI is a powerful tool, but like any tool, it can be used for good or bad. The key is to approach it with critical thinking, ethical considerations, and a healthy sense of skepticism.

And there you have it—AI, demystified, debunked, and definitely not the god we sometimes make it out to be. Let’s keep our heads on straight, folks. The future’s bright, and guess what? We get to shape it.

Key Takeaways on “No god in the Machine”

- AI Is a Tool, Not a Deity

AI isn’t a magical, all-knowing entity; it’s just a sophisticated tool that can recognize patterns and mimic human language. Treating it as a god-like force leads to unrealistic expectations and can create unnecessary fear or hype. We should acknowledge its capabilities without attributing intelligence or consciousness where none exists.

- Beware of Tech Solutionism

There’s a growing belief that AI can solve all of humanity’s problems, but this “tech solutionism” is misleading. AI is not a one-size-fits-all answer and cannot replace human judgment, empathy, or ethical decision-making. Relying on technology alone often ignores the deeper social, political, and ethical issues that require human insight.

- AI Mirrors Human Biases and Flaws

AI systems learn from data that reflects human behaviors, biases, and inequalities. This means that if left unchecked, AI can perpetuate existing stereotypes, prejudices, and systemic injustices. It’s essential to address these biases and implement ethical guidelines to ensure AI is used fairly and responsibly.

- AI Can’t Replace Human Creativity and Emotion

While AI can generate art, music, or writing, it lacks the emotional depth, personal experiences, and imperfections that make human creativity unique. Genuine creativity stems from the human experience, something AI simply can’t replicate. It’s crucial to appreciate and celebrate what makes human creativity distinct.

- We Must Approach AI with Critical Thinking and Caution

The future of AI isn’t predetermined; it’s shaped by how we choose to develop and use it. To avoid falling into the trap of AI worship or fear, we need to approach it with a critical eye, ethical considerations, and a balanced perspective. This means engaging in thoughtful discussions about AI’s role, limitations, and potential impact on society.